Project

Scalable Speech Recognition

How can we implement a centralized deep learning system that converts speech to text, and train it efficiently and in a scalable manner?

Abstract:

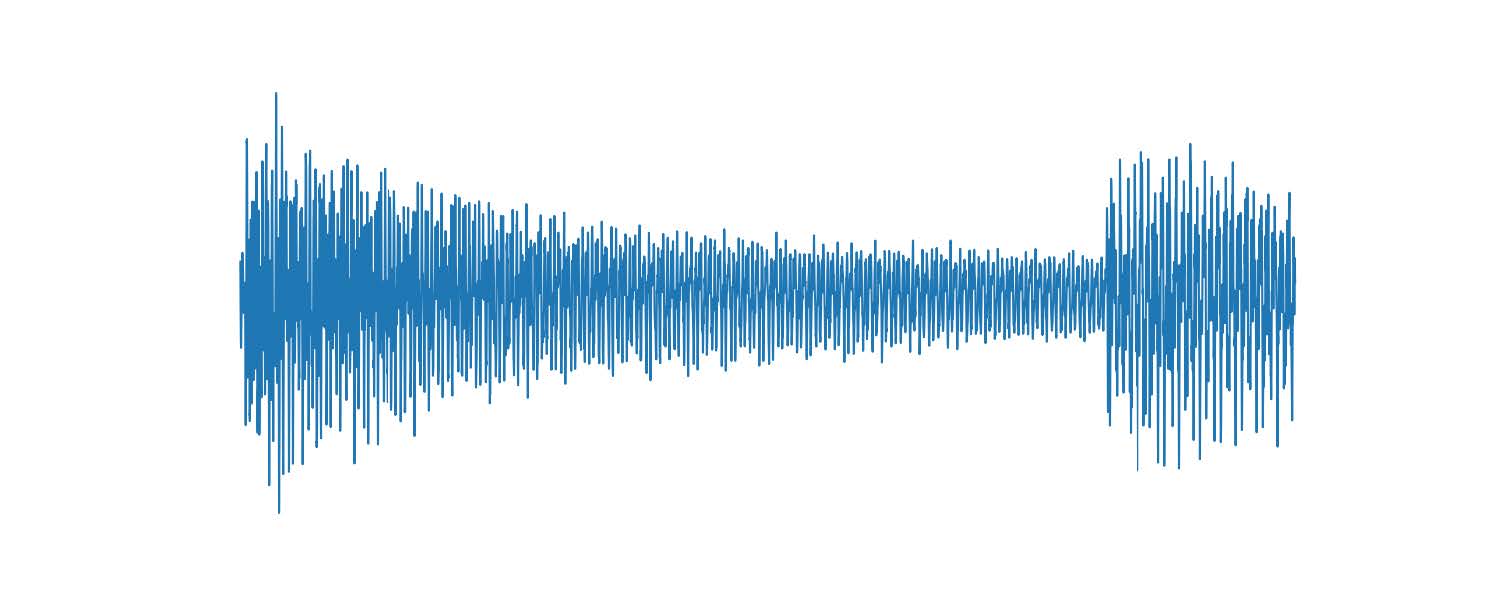

This project implements an Automatic Speech Recognition (ASR) system that converts speech to text. The system extracts Mel features, Log Mel features, and Mel-Frequency Cepstral Coefficients (MFCC) from audio and uses them to train a Deep Neural Network (DNN) Acoustic Model (AM). The models are trained on two different hardware systems with four GPUs, and the training process is benchmarked and optimized. The throughput, latency, and accuracy of the models are evaluated and compared with those of other ASR systems. The best model achieves a Word Error Rate (WER) of 10.5% and a latency shorter than the duration of the input audio, which makes it suitable for real-time applications.
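The three feature types named above form a chain: a Mel spectrogram is the short-time power spectrum passed through a bank of triangular filters spaced on the mel scale, Log Mel features are its logarithm, and MFCCs are a discrete cosine transform (DCT) of the Log Mel features. The following is a minimal NumPy sketch of that chain, not the project's own code. The function names, the HTK-style mel formula, the 25 ms Hann window and 10 ms hop at 16 kHz (`n_fft=400`, `hop=160`), and the choice of 40 mel bands and 13 coefficients are all illustrative assumptions.

```python
import numpy as np

def hz_to_mel(f):
    # HTK-style mel scale (assumed; other mel formulas exist)
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_mels, n_fft, sample_rate):
    """Triangular filters evenly spaced in mel, shape (n_mels, n_fft // 2 + 1)."""
    n_bins = n_fft // 2 + 1
    bin_freqs = np.linspace(0.0, sample_rate / 2, n_bins)
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2), n_mels + 2)
    hz_points = mel_to_hz(mel_points)
    fbank = np.zeros((n_mels, n_bins))
    for i in range(n_mels):
        left, center, right = hz_points[i], hz_points[i + 1], hz_points[i + 2]
        rising = (bin_freqs - left) / (center - left)
        falling = (right - bin_freqs) / (right - center)
        fbank[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fbank

def power_spectrogram(signal, n_fft=400, hop=160):
    """Hann-windowed frames -> power spectrum, shape (n_frames, n_fft // 2 + 1)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop  # assumes len(signal) >= n_fft
    frames = np.stack([signal[t * hop : t * hop + n_fft] * window for t in range(n_frames)])
    return np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2 / n_fft

def dct_matrix(n_out, n_in):
    """Orthonormal DCT-II basis, shape (n_out, n_in)."""
    n = np.arange(n_in)
    k = np.arange(n_out)[:, None]
    basis = np.cos(np.pi * k * (2 * n + 1) / (2 * n_in))
    basis[0] *= np.sqrt(1.0 / n_in)
    basis[1:] *= np.sqrt(2.0 / n_in)
    return basis

def speech_features(signal, sample_rate=16000, n_fft=400, hop=160, n_mels=40, n_mfcc=13):
    """Return (Mel, Log Mel, MFCC) features, one row per frame."""
    power = power_spectrogram(signal, n_fft, hop)
    mel = power @ mel_filterbank(n_mels, n_fft, sample_rate).T  # (frames, n_mels)
    log_mel = np.log(mel + 1e-10)                               # small floor avoids log(0)
    mfcc = log_mel @ dct_matrix(n_mfcc, n_mels).T               # (frames, n_mfcc)
    return mel, log_mel, mfcc

# Usage: one second of a 440 Hz tone sampled at 16 kHz
sr = 16000
tone = np.sin(2 * np.pi * 440.0 * np.arange(sr) / sr)
mel, log_mel, mfcc = speech_features(tone, sr)
print(mel.shape, log_mel.shape, mfcc.shape)  # (98, 40) (98, 40) (98, 13)
```

Keeping only the first few DCT coefficients is what makes MFCCs a compact, decorrelated summary of the spectral envelope, whereas Mel and Log Mel features keep one value per filter band. Production pipelines typically use a tested library implementation rather than a hand-written one like this.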