We build the infrastructure of data science: scalable and efficient systems and applications supporting the data lifecycle, including collection, transfer, storage, processing, analytics and curation.

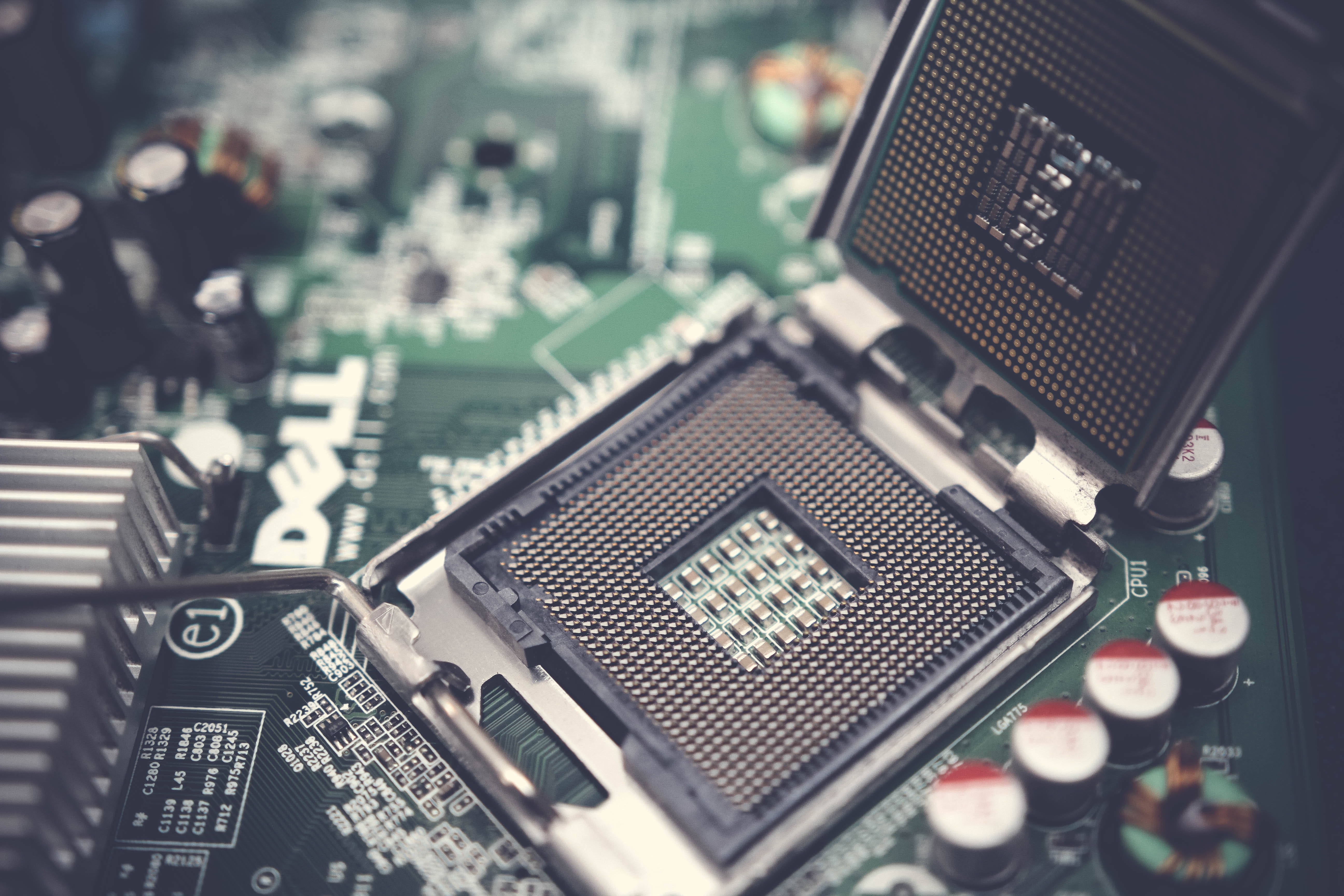

Data science enables forecasts, possibly in real time, at ever lower cost and with ever better accuracy, for the benefit of society. Today, data scientists can collect more data, access it faster, and apply more complex analyses than ever before. These advances are mainly due to radical hardware evolution, combined with the diversification of data systems and the emergence of analytics frameworks that boost the productivity of data scientists.

Research in our group covers the following topics: